Qwen3 Day0 Deployment on Intel OpenVINO™

openlab_96bf3613

Updated 9 months ago

杨亦诚

Qwen3 is the latest text generation model series from Alibaba's Tongyi team, covering both dense and mixture-of-experts (MoE) architectures. Trained on large-scale data, Qwen3 brings substantial improvements in reasoning, instruction following, agent capabilities, and multilingual support. The OpenVINO™ toolkit helps developers quickly build LLM-based applications and make full use of the heterogeneous compute of Intel AI PCs for efficient inference.

This article uses Qwen3-8B as an example to show how to deploy Qwen3 series models on Intel platforms (GPU, NPU) with the OpenVINO™ Python API.

Step 1: Environment Preparation

Use the following commands to set up the Python environment for model deployment:

python -m venv py_venv
./py_venv/Scripts/activate.bat
pip install --pre -U openvino-genai openvino openvino-tokenizers --extra-index-url https://storage.openvinotoolkit.org/simple/wheels/nightly
pip install nncf
pip install git+https://github.com/huggingface/optimum-intel.git
pip install "transformers>=4.51.3"

Step 2: Model Download and Conversion
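Before running the export, it can be worth confirming that the packages from Step 1 are actually installed in the active virtual environment. The sketch below uses only the Python standard library; the helper names (`version_tuple`, `meets_minimum`, `report`) are hypothetical and not part of OpenVINO™. Only the transformers minimum (4.51.3) comes from the commands above, and the other packages are simply checked for presence.

```python
from importlib import metadata


def version_tuple(version: str) -> tuple:
    """Turn '4.51.3' or '2025.2.0.dev20250501' into a tuple of its leading integers."""
    parts = []
    for piece in version.split("."):
        digits = ""
        for ch in piece:
            if ch.isdigit():
                digits += ch
            else:
                break
        if not digits:
            break  # stop at the first non-numeric component, e.g. 'dev20250501'
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(installed: str, minimum: str) -> bool:
    """Compare two version strings by their numeric components only."""
    return version_tuple(installed) >= version_tuple(minimum)


def report(packages: dict) -> dict:
    """Map each package name to (installed_version_or_None, ok_flag)."""
    result = {}
    for name, minimum in packages.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            result[name] = (None, False)
            continue
        ok = minimum is None or meets_minimum(installed, minimum)
        result[name] = (installed, ok)
    return result


if __name__ == "__main__":
    # A minimum of None means "just check that the package is installed".
    wanted = {
        "transformers": "4.51.3",
        "openvino": None,
        "openvino-genai": None,
        "openvino-tokenizers": None,
        "nncf": None,
        "optimum-intel": None,
    }
    for name, (installed, ok) in report(wanted).items():
        print(f"{name:22s} {installed or 'missing':28s} {'OK' if ok else 'CHECK'}")
```

The comparison deliberately ignores pre-release suffixes, which is a simplification; for strict version semantics a dedicated version-parsing library would be a better fit.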

Before deployment, the original PyTorch model must be converted to the OpenVINO™ Intermediate Representation (IR) format and compressed, for lightweight deployment and the best performance. The optimum-cli command-line tool provided by Optimum performs model conversion and weight quantization in one step:

optimum-cli export openvino --model Qwen/Qwen3-8B --task text-generation-with-past --weight-format int4 --group-size 128 --ratio 0.8 Qwen3-8B-int4-ov

Developers can adjust the quantization parameters based on the quality of the model's output, including:

--model: The model ID on HuggingFace. You can also download the original model in advance and replace the model ID with its local path. For developers in China, ModelScope is recommended as the download channel; see the official ModelScope guide: https://www.modelscope.cn/doc***odels/download

--weight-format: Quantization precision. Options: fp32, fp16, int8, int4, int4_sym_g128, int4_asym_g128, int4_sym_g64, int4_asym_g64.

--group-size: Number of channels in the weights that share quantization parameters.

--ratio: Ratio of int4 to int8 weights (default: 1.0). For example, 0.6 means 60% of the weights are stored as int4 and 40% as int8.

--sym: Whether to enable symmetric quantization.
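To make --group-size and --sym more concrete, here is a toy sketch of group-wise 4-bit quantization in plain Python. It is not how NNCF or optimum-cli implement compression; all function names are hypothetical, and it only illustrates the idea that each group of weights shares one scale (plus one zero point in the asymmetric case). Treating a group size of -1 as "one group per row" is an assumption about the per-channel setting.

```python
def quantize_group_sym(group, bits=4):
    """Symmetric: one scale per group, integer levels centred on zero."""
    qmax = 2 ** (bits - 1) - 1                      # 7 for 4 bits
    scale = max(abs(w) for w in group) / qmax or 1.0
    q = [max(-qmax - 1, min(qmax, round(w / scale))) for w in group]
    return q, scale


def quantize_group_asym(group, bits=4):
    """Asymmetric: one scale and one zero point per group, levels 0..15."""
    levels = 2 ** bits - 1                          # 15 for 4 bits
    lo, hi = min(group), max(group)
    scale = (hi - lo) / levels or 1.0
    zero_point = round(-lo / scale)
    q = [max(0, min(levels, round(w / scale) + zero_point)) for w in group]
    return q, scale, zero_point


def quantize_row(row, group_size, symmetric=True):
    """Split one weight row into groups; -1 is treated as a single group per row."""
    size = len(row) if group_size == -1 else group_size
    groups = [row[i:i + size] for i in range(0, len(row), size)]
    fn = quantize_group_sym if symmetric else quantize_group_asym
    return [fn(g) for g in groups]


def dequantize(quantized):
    """Rebuild approximate float weights from the per-group integers."""
    out = []
    for item in quantized:
        if len(item) == 2:                          # symmetric: (q, scale)
            q, scale = item
            out.extend(v * scale for v in q)
        else:                                       # asymmetric: (q, scale, zero_point)
            q, scale, zero_point = item
            out.extend((v - zero_point) * scale for v in q)
    return out


# A row whose first half is tiny and second half is large.
row = [0.02, -0.01, 0.03, 0.00, 1.50, -1.20, 0.90, -0.40]
for size in (4, -1):
    approx = dequantize(quantize_row(row, size))
    mean_err = sum(abs(a - b) for a, b in zip(row, approx)) / len(row)
    print(f"group_size={size:>2}: mean abs error = {mean_err:.4f}")
```

With the smaller group size, the tiny weights get their own scale instead of sharing one with the large weights, so they are reconstructed more accurately. The cost is that more scales have to be stored.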

For models that run on NPU, we recommend the following parameters to balance performance and accuracy:

optimum-cli export openvino --model Qwen/Qwen3-8B --task text-generation-with-past --weight-format nf4 --sym --group-size -1 Qwen3-8B-nf4-ov --backup-precision int8_sym

Step 3: Model Deployment

OpenVINO™ currently offers two deployment approaches for large language models (LLMs). If you are used to deploying models through the Transformers library interface and want a richer feature set, use the Python-based Optimum-intel tool. If you want the best performance or a lightweight deployment, the GenAI API is the better choice: it supports both Python and C++, with an installation footprint of less than 200MB.

Optimum-intel Deployment Example

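from optimum.intel.openvino import OVModelForCausalLM
from transformers import AutoTokenizer

# Output directory of the optimum-cli export in Step 2
llm_model_path = "Qwen3-8B-int4-ov"

# Load the converted model onto the Intel GPU
ov_model = OVModelForCausalLM.from_pretrained(
    llm_model_path,
    device='GPU',
)
tokenizer = AutoTokenizer.from_pretrained(llm_model_path)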

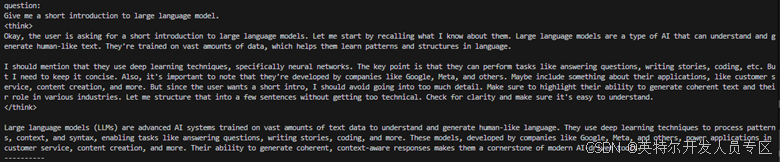

prompt = "Give me a short introduction to large language model."
messages = [{"role": "user", "content": prompt}]
# Build the chat prompt; enable_thinking=True turns on Qwen3's thinking mode
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True,
    enable_thinking=True
)
model_inputs = tokenizer([text], return_tensors="pt")

generated_ids = ov_model.generate(**model_inputs, max_new_tokens=1024)
# Keep only the newly generated tokens, dropping the prompt
output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

# Split after the last occurrence of token 151668 (</think>)
try:
    index = len(output_ids) - output_ids[::-1].index(151668)
except ValueError:
    # No thinking section was produced
    index = 0

thinking_content = tokenizer.decode(output_ids[:index], skip_special_tokens=True).strip("\n")
content = tokenizer.decode(output_ids[index:], skip_special_tokens=True).strip("\n")

print("thinking content:", thinking_content)
print("content:", content)
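The split between the thinking section and the final answer can be tried on its own, without loading a model. The sketch below wraps the same reverse-search logic in a hypothetical helper, `split_thinking`, and runs it on made-up token IDs; only the ID 151668 is taken from the example above.

```python
END_THINK_ID = 151668  # end-of-thinking token ID used in the example above


def split_thinking(output_ids, end_think_id=END_THINK_ID):
    """Return (thinking_ids, answer_ids), split just after the last end-of-thinking token."""
    try:
        # Search the reversed list so that the LAST occurrence is found
        index = len(output_ids) - output_ids[::-1].index(end_think_id)
    except ValueError:
        # No marker at all: everything is treated as the answer
        index = 0
    return output_ids[:index], output_ids[index:]


# Toy token IDs, not real model output
thinking, answer = split_thinking([10, 11, 151668, 20, 21])
print(thinking)  # [10, 11, 151668]
print(answer)    # [20, 21]

thinking, answer = split_thinking([20, 21])
print(thinking)  # []
print(answer)    # [20, 21]
```

The marker token itself stays on the thinking side of the split. In the full example it does not show up in the decoded text, because decoding is done with skip_special_tokens=True.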

GenAI API Deployment Example

import openvino_genai as ov_genai

generation_config = ov_genai.GenerationConfig()

generation_config.max_new_tokens = 128

generation_config.apply_chat_template = False

pipe = ov_genai.LLMPipeline(llm_model_path, "GPU")

result = pipe.generate(prompt, generation_config)

To deploy the model on NPU, simply change the device name from "GPU" to "NPU".

pipe = ov_genai.LLMPipeline(llm_model_path, "NPU")
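If the same script has to run on machines with different hardware, the device name can be chosen at runtime instead of being hard-coded. The sketch below is a plain-Python helper with a hypothetical name, `pick_device`; it assumes the list of device names comes from `openvino.Core().available_devices`, where entries may look like "CPU", "GPU.0" or "NPU". The preference order is only an illustration.

```python
def pick_device(available, preferred=("NPU", "GPU", "CPU")):
    """Return the first preferred device family that appears in `available`."""
    # "GPU.0" and "GPU.1" both belong to the "GPU" family
    families = {name.split(".")[0] for name in available}
    for device in preferred:
        if device in families:
            return device
    raise RuntimeError(f"None of {preferred} found in {sorted(families)}")


# Hard-coded lists for illustration. In a real script the list would come from:
#   import openvino as ov
#   available = ov.Core().available_devices
print(pick_device(["CPU", "GPU.0", "GPU.1"]))          # GPU
print(pick_device(["CPU", "GPU", "NPU"]))              # NPU
print(pick_device(["CPU"], preferred=("GPU", "CPU")))  # CPU
```

The result could then be passed as the second argument of ov_genai.LLMPipeline. Note that Step 2 uses different export settings for NPU, so the model directory may need to change together with the device.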

To enable streaming output, pass a custom streamer function to the OpenVINO GenAI pipeline:

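import sys

def streamer(subword):
    # Print each decoded chunk as soon as it arrives
    print(subword, end='', flush=True)
    sys.stdout.flush()
    # Returning False tells the pipeline to keep generating
    return False

pipe.generate(prompt, generation_config, streamer=streamer)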
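A streamer can also collect the chunks it receives and ask for an early stop. The sketch below assumes the same boolean convention as the example above, where False means "keep generating" and True requests a stop. `make_collecting_streamer` is a hypothetical helper, and the loop only simulates a pipeline with hand-written chunks.

```python
def make_collecting_streamer(max_chunks=None):
    """Build a streamer that prints and stores chunks, stopping after max_chunks."""
    chunks = []

    def streamer(subword):
        chunks.append(subword)
        print(subword, end="", flush=True)
        # True asks the caller to stop; False lets generation continue
        return max_chunks is not None and len(chunks) >= max_chunks

    return streamer, chunks


# Simulated pipeline: feed chunks until the streamer asks to stop
streamer, chunks = make_collecting_streamer(max_chunks=3)
for piece in ["Open", "VINO", " runs", " Qwen3"]:
    if streamer(piece):
        break
print()
print(repr("".join(chunks)))  # 'OpenVINO runs'
```

With a real pipeline, the returned function would be passed as streamer=... to pipe.generate, and the collected list would hold the full streamed text afterwards.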

In addition, the GenAI API provides a chat mode. Between calls to pipe.start_chat() and pipe.finish_chat(), the history of a multi-turn conversation is kept in memory as a KV cache, which improves inference efficiency.

Chat mode example:

pipe.start_chat()
while True:
    try:
        prompt = input('question:\n')
    except EOFError:
        # End of input (e.g. Ctrl+Z / Ctrl+D) ends the chat
        break
    pipe.generate(prompt, generation_config, streamer)
    print('\n----------')
pipe.finish_chat()

Conclusion

Whether using Optimum-intel or the OpenVINO™ GenAI API, developers can easily deploy the converted Qwen3 model on Intel hardware such as GPUs and NPUs, and then build a variety of LLM-based services and applications locally.

Reference:

llm-chatbot notebook: https://github.com/openvinotoolkit/openvino_notebooks/tree/latest/notebooks/llm-chatbot

GenAI API: https://github.com/openvinotoolkit/openvino.genai